SCFM: Shortcutting Pre-trained Flow Matching Diffusion Models is Almost Free Lunch

NeurIPS 2025

🔗 Resources

🚀 TL;DR

We introduce SCFM — a highly efficient post-training distillation method that converts any pre-trained flow matching diffusion model (e.g., Flux, SD3) into a 3–8 step sampler in <1 A100 day.

💡 Key Contributions

- Velocity-space self-distillation: Operates directly on the velocity field to enforce linear trajectory consistency across timesteps.

- No step-size conditioning needed: Unlike Shortcut Models (Frans et al. 2025), SCFM works on standard pre-trained FM models out-of-the-box.

- Few-shot distillation: Achieves competitive results with as few as 10 training images — the first successful few-shot distillation for 10B+ parameter diffusion models.

- Ultra-fast training: Distills Flux.1-Dev (12B) into a 3-step sampler in under 24 GPU hours using LoRA.

📊 Main Results (Flux.1-Dev → 3 Steps)

| Method | Steps | Latency (A100, s) | |ΔFID| ↓ | FID ↓ | CLIP ↑ |

|---|---|---|---|---|---|

| Flux-HyperSD | 3 | 1.33 | 1.52 | 9.65 | 31.95 |

| Flux-TDD | 3 | 1.33 | 4.46 | 8.26 | 31.38 |

| Flux-SCFM (Ours) | 3 | 1.33 | 1.01 | 6.34 | 33.10 |

| Flux-Schnell (Official) | 3 | 1.33 | 6.58 | 7.06 | 33.06 |

SCFM achieves the best FID and CLIP scores among 3-step distilled models — and does so without adversarial distillation (ADD/LADD).

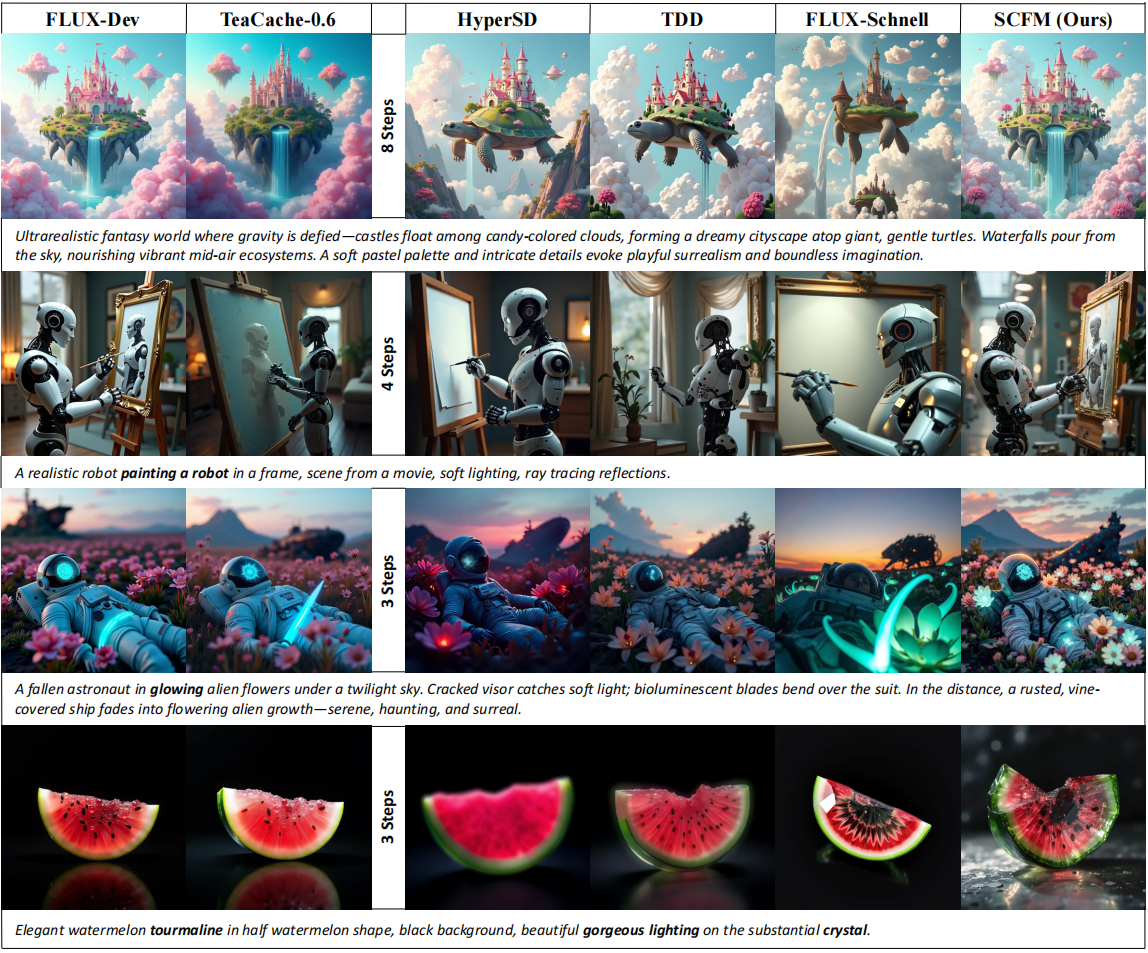

🖼️ Visual Comparison

📦 Get Started

- ✅ Compatible with any pre-trained flow matching model (Flux, SD3, etc.)

- ✅ Uses LoRA for parameter-efficient fine-tuning

- ✅ No need for large datasets(self generated) — works in few-shot regime

- ✅ Inference uses standard ODE solvers(flow match) — no architectural changes

🔗 Resources

📬 Contact

Questions? Reach out to the corresponding author: caitreex@gmail.com

arXiv

arXiv